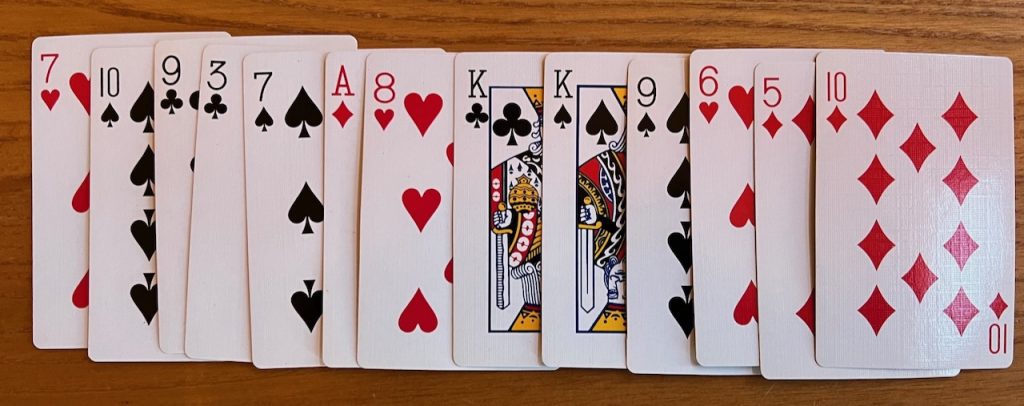

13-card hand dealt “at random”

Introduction

In several articles on this site (see, for instance, A and B), we have commented on the dangers of backtest overfitting in finance.

By backtest overfitting, we mean the use of historical market data to develop an investment model, strategy or fund, where many variations are tried on the same fixed dataset. Backtest overfitting, a form of selection bias under multiple testing, has long plagued the field of finance and is now thought to be the leading reason why investments that look great when designed often disappoint when actually fielded to investors. Models, strategies and funds suffering from this type of statistical overfitting typically target the random patterns present in the limited in-sample test set on which they are based, and thus often perform erratically when presented with new, truly out-of-sample data.

The potential for backtest overfitting in the financial field has grown enormously in recent years with the increased use of computer programs that search a space of millions or even billions of parameter variations for a given model, strategy or fund, and then select only the “optimal” choice for publication or market implementation. The sobering consequence is that a significant portion of the models, strategies and funds employed in the investment world, including many of those marketed to individual investors, may be merely statistical mirages.
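A minimal Python sketch of this selection effect follows. Its assumptions are our own, not taken from the cited work: every strategy variant produces pure-noise daily returns (so its true Sharpe ratio is zero), a year has 252 trading days, and daily volatility is 1%. The code backtests 200 such variants on five years of data, keeps the best one, and then scores that same variant on a fresh five-year period. The function names are hypothetical.

```python
import math
import random

TRADING_DAYS = 252  # assumed trading days per year


def annualized_sharpe(daily_returns):
    """Annualized Sharpe ratio of daily returns, assuming a zero risk-free rate."""
    n = len(daily_returns)
    mean = math.fsum(daily_returns) / n
    var = math.fsum((r - mean) ** 2 for r in daily_returns) / (n - 1)
    return mean / math.sqrt(var) * math.sqrt(TRADING_DAYS) if var > 0 else 0.0


def best_of_many(n_variants=200, years=5, seed=1):
    """Backtest many zero-skill variants on one period and keep the best one.

    Returns the winner's in-sample Sharpe ratio and its Sharpe ratio on a
    fresh, never-seen period of the same length.
    """
    rng = random.Random(seed)
    days = years * TRADING_DAYS
    best_in, best_out = -math.inf, 0.0
    for _ in range(n_variants):
        # Hypothetical variant: its returns are pure noise, so its true Sharpe ratio is 0.
        backtest = [rng.gauss(0.0, 0.01) for _ in range(days)]
        fresh = [rng.gauss(0.0, 0.01) for _ in range(days)]
        sr = annualized_sharpe(backtest)
        if sr > best_in:  # selection is based on the backtest alone
            best_in, best_out = sr, annualized_sharpe(fresh)
    return best_in, best_out


sr_in, sr_out = best_of_many()
print(f"best backtest Sharpe: {sr_in:.2f}; same variant out of sample: {sr_out:.2f}")
```

With these settings the winning backtest usually shows an annualized Sharpe ratio above 1, while the same variant's out-of-sample figure scatters around zero; the exact numbers depend on the seed.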

As an illustration, in this AMS Notices article, the authors show that if only five years of daily stock market data are available as a backtest, then no more than 45 independent variations of a strategy should be tried on this data, or the resulting strategy will be overfit, in the specific sense that the strategy’s Sharpe ratio is likely to be 1.0 or greater just by chance (even though the true Sharpe ratio may be zero or negative).
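The 45-trial figure can be reproduced, as far as we can tell, from the “minimum backtest length” approximation in that AMS Notices paper. The expected maximum of N independent standard normal draws is approximated by E[max_N] ≈ (1 − γ)Φ⁻¹(1 − 1/N) + γΦ⁻¹(1 − 1/(Ne)), where γ is the Euler-Mascheroni constant and Φ⁻¹ is the inverse standard normal CDF; dividing by the target Sharpe ratio and squaring gives the number of years of data needed. The Python sketch below assumes independent trials of zero-skill strategies and annualized Sharpe estimates whose variance is roughly one over the number of years of data; the function names are ours.

```python
import math
from statistics import NormalDist

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def expected_max_z(n_trials):
    """Approximate expected maximum of n_trials independent standard normal draws (n_trials >= 2)."""
    z = NormalDist().inv_cdf
    return ((1 - EULER_GAMMA) * z(1 - 1 / n_trials)
            + EULER_GAMMA * z(1 - 1 / (n_trials * math.e)))


def min_backtest_years(n_trials, target_sharpe=1.0):
    """Years of data needed so that the best of n_trials zero-skill strategies
    is not expected to reach target_sharpe by luck alone."""
    return (expected_max_z(n_trials) / target_sharpe) ** 2


def max_trials(years, target_sharpe=1.0):
    """Largest number of trials (at least 2) whose minimum backtest length fits in `years`."""
    n = 2
    while min_backtest_years(n + 1, target_sharpe) <= years:
        n += 1
    return n


print(f"{min_backtest_years(45):.2f} years")  # 5.00 years
print(max_trials(5))  # 45
```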

Some commonly used techniques to compensate for backtest overfitting are themselves subject to overfitting if not used correctly. One example is the “hold-out method”: developing a model or investment fund based on a backtest over one date range, then checking the result on a different date range. The trouble is that those using the hold-out method may iteratively tune the parameters of their model until its score on the hold-out data, as measured, say, by the Sharpe ratio, is impressively high. These repeated tuning tests, all run on the same fixed hold-out dataset, are themselves tantamount to backtest overfitting.
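The hold-out trap can be sketched the same way. In this illustrative Python example (our own construction, with hypothetical names), a long/short rule driven by five noise “signals” is tuned by random tweaks to its weights, and a tweak is kept only if it raises the Sharpe ratio on a single fixed hold-out period. A second period that is never consulted during tuning plays the role of truly new data. The noise model, step size and 300 tuning rounds are all assumptions.

```python
import math
import random

TRADING_DAYS = 252  # assumed trading days per year


def annualized_sharpe(daily_returns):
    """Annualized Sharpe ratio of daily returns, assuming a zero risk-free rate."""
    n = len(daily_returns)
    mean = math.fsum(daily_returns) / n
    var = math.fsum((r - mean) ** 2 for r in daily_returns) / (n - 1)
    return mean / math.sqrt(var) * math.sqrt(TRADING_DAYS) if var > 0 else 0.0


def noise_period(days, n_signals, rng):
    """A market in which returns and signals are independent noise: no rule has real skill."""
    returns = [rng.gauss(0.0, 0.01) for _ in range(days)]
    signals = [[rng.gauss(0.0, 1.0) for _ in range(n_signals)] for _ in range(days)]
    return returns, signals


def strategy_returns(weights, period):
    """Hypothetical rule: long when the weighted signal sum is positive, short otherwise."""
    returns, signals = period
    return [r if sum(w * x for w, x in zip(weights, s)) > 0 else -r
            for r, s in zip(returns, signals)]


def tune_on_holdout(n_signals=5, days=TRADING_DAYS, rounds=300, seed=7):
    """Repeatedly tweak the weights, keeping a tweak only if the hold-out Sharpe improves."""
    rng = random.Random(seed)
    holdout = noise_period(days, n_signals, rng)
    untouched = noise_period(days, n_signals, rng)  # never consulted while tuning
    weights = [rng.gauss(0.0, 1.0) for _ in range(n_signals)]
    start = best = annualized_sharpe(strategy_returns(weights, holdout))
    for _ in range(rounds):
        candidate = [w + rng.gauss(0.0, 0.5) for w in weights]
        score = annualized_sharpe(strategy_returns(candidate, holdout))
        if score > best:
            weights, best = candidate, score
    return start, best, annualized_sharpe(strategy_returns(weights, untouched))


start, tuned, fresh = tune_on_holdout()
print(f"hold-out Sharpe: {start:.2f} -> {tuned:.2f}; untouched data: {fresh:.2f}")
```

Because a tweak survives only if it improves the hold-out score, that score can never go down during tuning, even though the rule has no real skill; only the untouched period reveals this.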

For additional details, see How backtest overfitting in finance leads to false discoveries, which appeared in the December 2021 issue of the British journal Significance. This article is condensed from the following manuscript, which is freely available from SSRN: Finance is Not Excused: Why Finance Should Not Flout Basic Principles of Statistics.

The post-hoc probability fallacy

Backtest overfitting can also be seen as an instance of the post-hoc probability fallacy: calculating a probability or statistical score based on a fixed limited dataset, after the fact, and then claiming a remarkable result. This is equivalent to dealing a nondescript hand of cards, such as the one pictured in the figure above, then calculating its probability after the fact (approximately one in 4 × 10²¹, counting the order in which the 13 cards were dealt), and then claiming a remarkably improbable result. In reality, of course, there is nothing particularly remarkable about this hand of cards at all; the post-hoc probability calculation is completely misleading.
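The card arithmetic is easy to reproduce. The Python sketch below (our own illustration; the deck encoding and function names are hypothetical) deals a random 13-card hand and computes its after-the-fact probability both as an ordered deal, which is where a figure of roughly one in 4 × 10²¹ comes from, and as an unordered hand. Either way, the number is identical for every possible hand, which is exactly why it signifies nothing.

```python
import math
import random

SUITS = "SHDC"
RANKS = "23456789TJQKA"
DECK = [rank + suit for suit in SUITS for rank in RANKS]  # 52 cards


def deal(rng, n_cards=13):
    """Deal n_cards from a shuffled deck, in the order they come off the top."""
    return rng.sample(DECK, n_cards)


def post_hoc_probability(hand, ordered=True):
    """After-the-fact probability of exactly this hand; note it ignores which cards they are."""
    n = len(hand)
    count = math.perm(len(DECK), n) if ordered else math.comb(len(DECK), n)
    return 1 / count


hand = deal(random.Random())
print(" ".join(hand))
print(f"ordered deal: 1 in {math.perm(52, 13):.2e}")   # ordered deal: 1 in 3.95e+21
print(f"unordered hand: 1 in {math.comb(52, 13):,}")   # unordered hand: 1 in 635,013,559,600
```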

One example of the post-hoc probability fallacy in biology can be seen in the attempt by some evolution skeptics to claim that the human alpha-globin molecule, a component of hemoglobin that performs a key oxygen transfer function in blood, could not have arisen by “random” evolution. These writers argue that since human alpha-globin is a protein chain based on a sequence of 141 amino acids, and since there are 20 different amino acids common in living systems, the probability of selecting human alpha-globin “at random” is one in 20¹⁴¹, or roughly one in 10¹⁸³. This probability is so tiny, they argue, that even after millions of years of random molecular trials, no human alpha-globin protein molecule would ever appear.
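The size of this number is easy to confirm. The short Python check below is our own illustration; it verifies only the arithmetic, not whether this is a meaningful probability to compute.

```python
import math

AMINO_ACIDS = 20    # amino acids common in living systems
CHAIN_LENGTH = 141  # residues in human alpha-globin

sequences = AMINO_ACIDS ** CHAIN_LENGTH  # exact count of possible 141-residue chains
exponent = CHAIN_LENGTH * math.log10(AMINO_ACIDS)

print(len(str(sequences)))                    # 184 (digits)
print(f"20^141 is about 10^{exponent:.1f}")   # 20^141 is about 10^183.4
```

The figure implicitly assumes that each of the 141 positions is filled uniformly and independently from the 20 amino acids, and that only this one exact sequence would do; the argument never justifies either assumption.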

But this line of reasoning is a dead ringer for the post-hoc probability fallacy. Note that the probability calculation was done after the fact, on a single fixed dataset, namely the human alpha-globin sequence, which certainly did appear in the course of evolution. Further, unlike the card example above, where one can correctly calculate the probability of any specific hand (because all such hands have the same probability), in this case there is no way to know whether some sequences are more likely than others, nor any other way to rationally calculate a reliable probability. It may well be that there is nothing special whatsoever about the human alpha-globin sequence, as evidenced, for example, by the great variety of alpha-globin molecules seen across species, all of which perform a similar oxygen transfer function. In any event, the impressive-sounding probability figure claimed by these writers is nothing but a statistical mirage, a vacuous arithmetic exercise with no foundation in real empirical biology.

Pinker on the post-hoc probability fallacy

A good introduction to the post-hoc probability fallacy for the general reader can be found in social scientist Steven Pinker’s new book Rationality: What It Is, Why It Seems Scarce, Why It Matters. He likens the post-hoc probability fallacy to the following joke:

A man tries on a custom suit and says to the tailor, “I need this sleeve taken in.” The tailor says, “No, just bend your elbow like this. See, it pulls up the sleeve.” The customer says, “Well, OK, but when I bend my elbow, the collar goes up the back of my neck.” The tailor says, “So? Raise your head up and back. Perfect.” The man says, “But now the left shoulder is three inches lower than the right one!” The tailor says, “No problem. Bend at the waist and then it evens out.” The man leaves the store wearing the suit, his right elbow sticking out, his head craned back, his torso bent to the left, walking with a herky-jerky gait. A pair of pedestrians pass him by. The first says, “Did you see that poor disabled guy? My heart aches for him.” The second says, “Yeah, but his tailor is a genius — the suit fits him perfectly!”

Pinker also cites this financial example:

An unscrupulous investment advisor with a 100,000-person mailing list sends a newsletter to half of the list predicting that the market will rise and a version to the other half of the list predicting that it will fall. At the end of every quarter he discards the names of the people to whom he sent the wrong prediction and repeats the process with the remainder. After two years he signs up the 1,562 recipients who are amazed at his track record of predicting the market eight quarters in a row.
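The advisor's trick can be simulated in a few lines. In this Python sketch (our own; the function name and the coin-flip market are assumptions), each quarter the remaining list is split in two, one half is told the market will rise and the other that it will fall, and only the half that received the correct call is kept.

```python
import random


def newsletter_scam(list_size=100_000, quarters=8, seed=None):
    """Simulate the mailing-list trick: predict both directions, keep the lucky half.

    Returns the number of recipients with a perfect record after each quarter.
    """
    rng = random.Random(seed)
    recipients = list(range(list_size))  # stand-ins for names on the mailing list
    history = [len(recipients)]
    for _ in range(quarters):
        half = len(recipients) // 2
        told_rise, told_fall = recipients[:half], recipients[half:]
        market_rose = rng.random() < 0.5  # the market's direction is a coin flip here
        recipients = told_rise if market_rose else told_fall
        history.append(len(recipients))
    return history


print(newsletter_scam(seed=2024))
```

Whatever the market does, some group always ends up with a perfect record. With one halving per quarter, about 390 names survive eight quarters; the 1,562 in the quotation corresponds to six halvings (100,000 / 2⁶ ≈ 1,562), so the exact count depends on how the rounds are tallied, but the mechanism is the same.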

Who is laughing now?

We may chuckle at such examples. An investment advisor pulling the caper above would certainly lose their license, if not be subject to criminal prosecution.

But consider this: Why is someone who designs an investment model, strategy or fund, but fails to account for and properly report the millions or billions of alternate parameter settings explored during the tuning process, any different from our unscrupulous investment advisor who did not inform his final clients of the many other mistaken predictions? Both are failing to disclose to the end-user investors the many trials that were not ultimately selected. Both may be the cause of needless disappointment if the resulting investments fall flat. There is no fundamental difference.

So who is laughing now?